It’s 11:30 p.m. Senior Roan Pennoyer is facing an 11:59 p.m. deadline for his AP English Literature essay on how literary devices in William Shakespeare’s “Othello” are employed to develop a theme regarding the relationship between manhood and honor. Unfortunately, between college applications, bungee jumping, and his six APs, he hasn’t yet taken the time to put a word on the page. Between the streams of tears of shame rolling down his face, he types a quick prompt into ChatGPT.

“In William Shakespeare’s Othello, the intricate interplay of literary devices serves as a masterful means to explore and illuminate the thematic complexities surrounding the relationship between manhood and honor…” and with that, the essay proceeds in a way that may be able to convince Roan’s teacher.

ChatGPT’s ability to write essays out of thin air, nearly instantaneously and at the push of a button, has transformed how people approach writing and access information online. In a world where AI is increasingly capable of completing such tasks, high schools have had to reevaluate how to prepare students for careers in the modern world.

“I think the [response from the educational community] kind of reflects any time a new technology comes out,” said AP Government teacher Ms. Adrianna Van Wonterghem. “There is this moral scare of, ‘Is this going to corrupt my job? Is it going to ruin teaching altogether? Is it going to make plagiarism rampant?’ So there is this fear and negative reputation behind it.”

The creation of artificial intelligence that can generate content, known as generative AI, raises the question, “If AI can write, is writing still a skill worth teaching? If AI can create artwork, why is the work of artists still of value?”

How Generative AI Gets Its Intelligence

For starters, generative AI tools that use natural language processing (NLP), such as ChatGPT and Perplexity AI, are only as sophisticated in their responses as the material they have processed. Per OpenAI, the creator of ChatGPT, the model is trained with prompts that users might ask.

Generative AI generally works in the following way: drawing on the well of information available to it, which can range from individually selected sources to the breadth of information on the internet, the AI predicts what the user wants to hear or see and creates its answer accordingly.

As such, if AI is given an inaccurate or incomplete source, it can produce an inaccurate creation, as shown by Google Gemini’s image generator. On February 22, Google temporarily paused Gemini’s ability to generate images of people after it created images of people of color when prompted to generate people such as the Founding Fathers or Nazi soldiers.

“It’s clear that we missed the mark,” said Google Vice President Prabhakar Raghavan. “Some of the images generated are inaccurate or even offensive. We’re grateful for users’ feedback and are sorry the feature didn’t work well.”

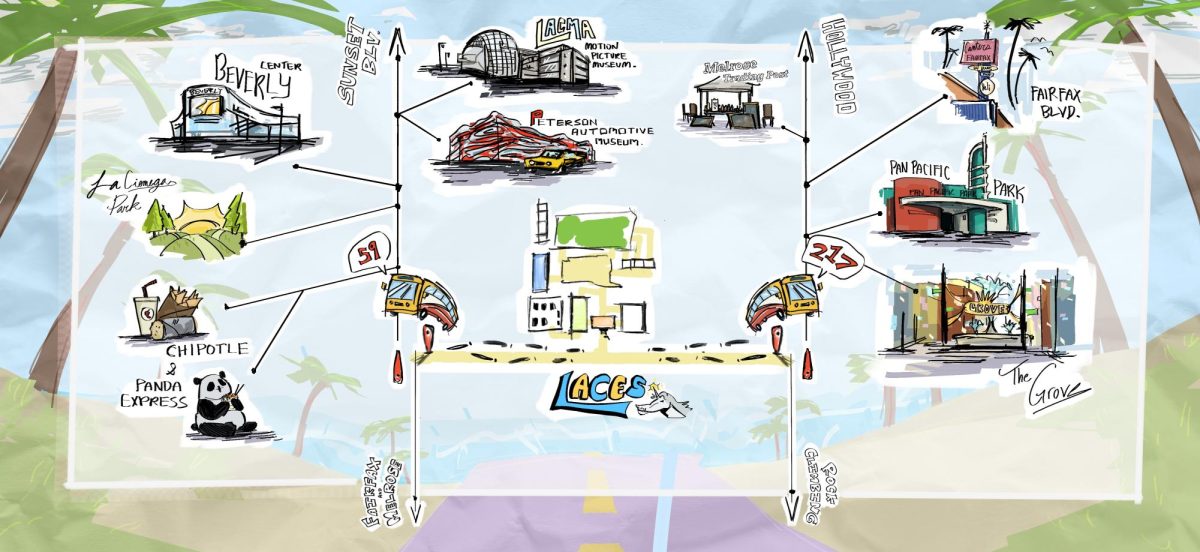

Generative AI Use at LACES

When students use generative AI to cheat, it’s often fairly obvious to LACES teachers.

“For instance, I’ve had a few students submit 100% AI essays,” said AP English Literature teacher Ms. Audrey Hong. “Even so, rather than make that argument, I actually grade as if a student wrote it, and I dissect it and rip it to shreds to show them that AI is not good at writing essays from scratch.”

“I think that it was very wordy, but had trouble finding quotes,” said an anonymous student who used artificial intelligence for homework. “It’s easy for teachers to catch it unless it’s modified after because it gets detected.”

However, some LACES faculty members see generative AI as a tool that can help with research and with gaining background knowledge.

“I think students should use [generative AI] as a tool to guide their research or to frame what they’re going to write about, or just as kind of a coach alongside them, but not as a replacement for their own thoughts, which is mostly what teachers are looking for in an assignment,” said Van Wonterghem.

Sophomore Ibrahim Piri often codes at school. As a person who has spent time creating machine learning algorithms and deep neural networks for AI image or text recognition, he believes that while AI can assist students in developing their own ideas, it cannot replace students’ work, even in English.

“Contrary to what many people have been led to believe, ChatGPT is not a panacea for all essay-writing,” said Piri. “The essays it can generate might seem well-written, as they sound intelligent, but they often lack any actual point, and are rather lousy at best.”

In math and science classes, some students have tried to use ChatGPT to do calculations, but it often gets them wrong because it is a large language model and is not trained for such purposes. However, there are tools such as Math GPT which can even solve calculus problems.

“I think there’s a couple of things that are invaluable to the development of the mathematical part of the brain, which are critical thinking, pattern-seeking, number sense fluency, and recognition of patterns,” said math teacher Mr. Philip Chung. “Those things have to be done in the absence of AI because they are things that could easily be supplanted by it … However, when [my students] get into the real world and they’re starting to be allowed to use AI, that’s when those problem-solving skills will come in handy so that we can tackle larger problems that are novel.”

Piri appeared to share similar sentiments regarding the role of AI for Generation Z once they enter the workforce.

“We spend so much of our time thinking about how to get AI to do things for us, and often forget to wonder about all of the things that humans can’t do, and that AI has the potential to figure out,” said Piri.

However, some experts are concerned about the untested societal effects of some AI programs. They argue that just as software is tested to ensure its quality, it should also be tested to predict and mitigate the societal problems it may cause.

“…Leading AI developers could establish an independent review board that would authorize whether and how to release language models, prioritizing access to independent researchers who can help assess risks and mitigation strategies, rather than speeding toward commercialization,” said Stanford professor of social ethics and technology Robert Reich.

Certain teachers, such as chemistry teacher Mr. Brian Noguchi, have encouraged their students not to use AI because their inappropriate use of the technology is not helping them learn the material.

“Students will put in the [chemistry] question or problem, and it solves it automatically for that, although [the answer] is wrong,” said Noguchi. “But I mean we definitely have seen the benefits. If students need explanations, or just looking up information, it’s a lot faster.”

Will AI make most jobs that exist today obsolete?

Teachers’ policies regarding AI are largely up to them as long as academic integrity is maintained. LAUSD, however, has created an Instructional Technology Initiative (ITI), which provides learning opportunities for LAUSD professionals, including teachers, so that schools can better prepare students for the future.

“I think that would be a fantastic idea [to train students to effectively use AI], but we don’t know what the future is going to look like,” said Noguchi.

Experts are unsure how AI will affect available jobs, but its impact is limited by its inability to think abstractly. AI can process information based on the data it has been allowed to accumulate, but it cannot reason using concepts and principles that exist beyond what it is programmed to do.

In some fields, such as the entertainment industry, AI may not be able to perform the responsibilities of a job to the same level of ability but will be used to cut costs. Within the next three years, an estimated 240,000 entertainment jobs in the United States will be disrupted by AI, with 62,000 being in California, according to a CVL Economics study. Most disrupted jobs are predicted to be entry-level.

“When you’re looking at any technology that’s essentially replacing [or consolidating] a junior or entry-level role … it is harming the ecosystem,” said Nicole Hendrix, co-founder of the Concept Art Association. “What does that mean if nobody’s really entering in and the bar is now this immovable wall?”

Scientists at Stanford University, Massachusetts Institute of Technology, and Google Research have been researching whether language models such as ChatGPT can train themselves through in-context learning, which could transform how the information technology field works with AI.

“I think our AI systems will also be able to do the same thing,” said OpenAI CEO Sam Altman. “They’ll be able to explain to us in natural language the steps from A to B, and we can decide whether we think those are good steps, even if we’re not looking into it to see each connection.”